Getting started with Ollama and Web UI

Learn how to run Ollama with a sleek Web UI using Open WebUI and Docker. This guide walks you through the setup so you can chat with local LLMs right from your browser instead of typing prompts in a terminal. Fast, simple, and beginner-friendly.

If you've already installed Ollama or you're familiar with what it does, the next step is making it more accessible—especially if you'd rather interact with models in a browser than a terminal.

This guide walks you through getting a Web UI set up for Ollama in just a few minutes. We'll use Open WebUI, a popular front-end that works out of the box with Ollama.

Web UI Prerequisites

Before we begin, make sure you’ve got the following:

- Ollama installed and running.

- Docker installed (for the simplest Web UI setup).
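Before moving on, you can confirm both prerequisites from a terminal. This is a minimal sketch, assuming Ollama is listening on its default address (http://localhost:11434) and that curl is installed; check_prereqs is just a helper name chosen for this example, not part of Ollama or Docker.

```shell
#!/bin/sh
# Minimal prerequisite check (a sketch, not an official tool).
# Assumes Ollama's default address, http://localhost:11434, and that curl is installed.

check_prereqs() {
  # Docker: is the CLI installed, and is the Docker daemon answering?
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker: not found"
  elif docker info >/dev/null 2>&1; then
    echo "docker: installed and running"
  else
    echo "docker: installed, but the daemon is not running"
  fi

  # Ollama: does the local server respond on its default port?
  if curl -fsS --max-time 3 http://localhost:11434 >/dev/null 2>&1; then
    echo "ollama: running"
  else
    echo "ollama: not reachable at http://localhost:11434"
  fi
}

check_prereqs
```

If Ollama shows as not reachable, start it first (for example with ollama serve) and run the check again.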

Installing Web UI

If Ollama is already running on your computer, you can run:

```shell
docker run -d \
  -p 3000:8080 \
  --add-host=host.docker.internal:host-gateway \
  -v open-webui:/app/backend/data \
  --name open-webui \
  --restart always \
  ghcr.io/open-webui/open-webui:main
```

This command will:

- Pull the Open WebUI image

- Create a persistent data volume

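- Map the Web UI to http://localhost:3000 (host port 3000 forwards to port 8080 inside the container)

- Let the container reach your computer, where Ollama is running, through the hostname host.docker.internal

- Ensure the container restarts automatically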

Give it a minute or two to complete the setup.
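Rather than guessing how long to wait, you can poll the page until it answers. This is a minimal sketch, assuming the -p 3000:8080 mapping from the command above and that curl is installed; wait_for_webui is a hypothetical helper name, not part of Open WebUI or Docker.

```shell
#!/bin/sh
# Poll the Web UI until it responds (a sketch).
# Assumes host port 3000, as mapped by the docker run command above.

wait_for_webui() {
  url="${1:-http://localhost:3000}"  # address to check
  tries="${2:-30}"                   # number of attempts, 2 seconds apart
  i=1
  while :; do
    # -f makes curl fail on HTTP errors, so success means the page is served
    if curl -fsS --max-time 3 -o /dev/null "$url" 2>/dev/null; then
      echo "Open WebUI is up at $url"
      return 0
    fi
    if [ "$i" -ge "$tries" ]; then
      echo "No response from $url after $tries attempts; try: docker logs open-webui" >&2
      return 1
    fi
    i=$((i + 1))
    sleep 2
  done
}
```

After the docker run command, call wait_for_webui; on a slower machine, give it more attempts, for example wait_for_webui http://localhost:3000 60.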

What is Docker Doing?

If you're new to Docker: it's a platform that lets you run isolated applications in containers. In this case, Docker runs the Web UI in its own environment, with everything it needs bundled inside. This means you don’t need to install or configure any additional dependencies—everything just works.

Using the Ollama Web UI

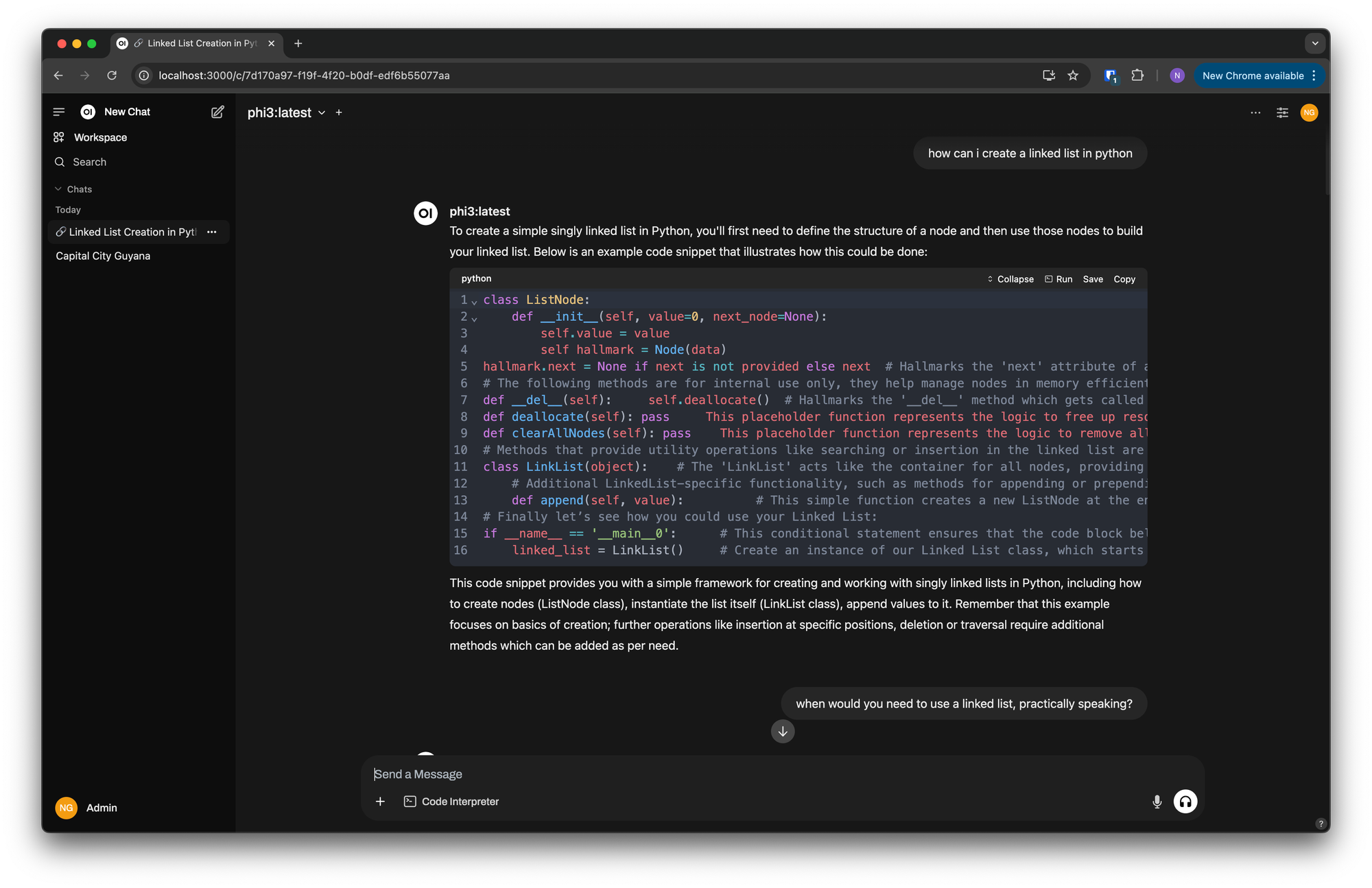

Once the container is running, open your browser and navigate to: http://localhost:3000/.

You should see a familiar chat-style interface, similar to ChatGPT. From here, you can interact with your local Ollama models in real time.

Switching Between Models

Web UI makes it easy to switch between models. At the top of the page, you'll find a dropdown menu where you can select any model you've installed with Ollama.
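To see which models Ollama reports as installed, and so which ones the dropdown should offer, you can ask Ollama's local API directly. This is a minimal sketch, assuming the default address http://localhost:11434, Ollama's GET /api/tags endpoint (which lists local models), and the compact JSON Ollama returns; model_names and list_ollama_models are hypothetical helper names.

```shell
#!/bin/sh
# List locally installed Ollama models (a sketch).
# Assumes Ollama's default address and its GET /api/tags endpoint.

# Extract each "name" value from /api/tags JSON read on stdin.
# A JSON tool such as jq would be more robust; grep and cut avoid extra dependencies.
model_names() {
  grep -o '"name":"[^"]*"' | cut -d'"' -f4
}

# Fetch the model list from Ollama and print one model name per line.
list_ollama_models() {
  base="${1:-http://localhost:11434}"
  curl -fsS --max-time 3 "$base/api/tags" | model_names
}
```

Running list_ollama_models should print names like llama3.2:latest, one per line. If a model you just pulled (for example with ollama pull llama3.2, where llama3.2 is only an example name) is missing from the dropdown, try reloading the page.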

Be sure to visit the Ollama model library at https://ollama.com/library to keep up to date on new releases.

Final Thoughts

With Ollama and Open WebUI, you get the power of local language models and the convenience of a web-based chat interface. Whether you're experimenting, building apps, or just exploring what's possible with local AI, this setup is fast, lightweight, and easy to use.